It’s no secret that I’m highly skeptical about the hype surrounding “artificial intelligence” in general, and more specifically, its use in marketing, publishing, and journalism. That skepticism is hard-earned from a lifetime of firsthand experience with legitimate technology-driven disruptions, and with exponentially more vaporware and broken promises.

I’m no technophobe. My entire career has been built on staying one step ahead of technology as “an early tester, late adopter.” I haven’t always been right, but I’ve never been egregiously wrong, either, because I don’t fall for the latest new shiny “pivot to…” hype. I’ve learned to read between the lines, follow the money, analyze potential outcomes, and — most importantly — question everything.

I can’t possibly experiment firsthand with every new shiny, of course, so I’m also a firm believer in the power of a “personal learning network” — a curated group of people whose expertise and credibility I can trust to help inform my own understanding of things I’m interested in, or leery of. Blogs and social networks helped expand PLNs well beyond what was possible 20 years ago, but I still believe nothing beats a well-researched and well-written book on a topic you want to learn more about.

Don’t Accept the Hype

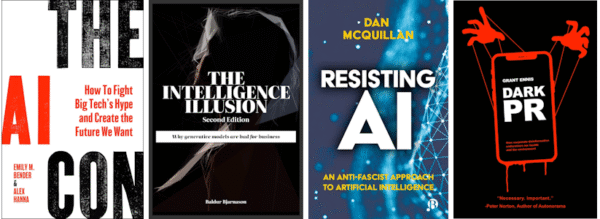

Fortunately, there are plenty of people much smarter than me who are pushing back on the AI hype machine every day. Some have written excellent books that have helped inform and refine my own natural skepticism, each of which I highly recommend to anyone who’s trying to develop their own understanding of what “artificial intelligence” really is — and isn’t — and where it fits, or doesn’t, into their own world.

The following are listed in order of how accessible I think they are to a general audience. The fourth is an ideal pairing for any (or all) of the other three, but it’s also a must-read on its own merits, regardless of what you think about AI.

The AI Con: How to Fight Big Tech’s Hype and Create the Future We Want by Emily M. Bender & Alex Hanna

“AI hype reduces the human condition to one of computability, quantification, and rationality… Viewing humans as a kind of machine has far-reaching implications for the field of computing and how AI is hyped up in broader circles.”

This is the most recent and most accessible critique of AI hype (from Boosters AND Doomers) I’ve read so far. It’s a good starting point for people who only know individual tools, whether they’re being pressured into incorporating them into their professional (or personal) lives or have read about some of the ways those tools have led to bad experiences for others. It’s also a good refresher for those who are already engaged and want another informed perspective.

The Intelligence Illusion: A practical guide to the business risks of Generative AI by Baldur Bjarnason

“I’ve never before experienced such a disparity between how much potential I see in a technology and how little I trust those who are making it.”

The first edition was my favorite read of 2023, and while I haven’t gotten to the second edition yet, I trust Bjarnason enough to believe it will not only retain its value — but very likely be even better. As I wrote in my review, it’s “accessible for anyone who’s spent more than 15 minutes with a clueless executive or myopic developer — or, frankly, engaged with any of the technological ‘disruptions’ of the past two decades — as Bjarnason rigorously unpacks the many risks involved with the most popular use cases being promoted by unscrupulous executives and ignorant journalists.”

Resisting AI: An Anti-Fascist Approach to Artificial Intelligence by Dan McQuillan

“When we’re thinking about the actuality of AI, we can’t separate the calculations in the code from the social context of its application. AI is never separate from the assembly of institutional arrangements that need to be in place for it to make an impact in society.”

Enlightening at times, but overwhelmingly academic, it reads like an “AI and Politics 401” textbook and was by far the least accessible of these books, although it would probably go down a little easier if I’d read it after The AI Con. It was originally published before ChatGPT was unleashed on the world and AI was being aggressively pushed into everything, so some of his proposed solutions seemed a little outdated or inadequate when I read it earlier this year. Still, they’re quite similar to Bender and Hanna’s recommendations, which seemed more realistic in an updated context.

Dark PR: How Corporate Disinformation Harms Our Health and the Environment by Grant Ennis

“Corporations use normalization to instill a sense of inevitability. When we fall for this frame we doubt that problems are problems and as a result, do not pursue solutions. Normalization preserves the status quo.”

This book actually has nothing to do with AI, but Ennis does such an excellent job breaking down and illustrating the various ways corporations successfully manipulate and misdirect us in other areas (no matter how savvy we might individually think we are) that it might actually be the most relevant of my four recommendations here. Paired with any one of the above, you’ll come away with a much clearer perspective on why AI’s “inevitability” narrative needs to be questioned and resisted at every step, and how to cut through the bullshit hype from business executives, pundits, and journalists alike. His call for collective action is inspiring and pragmatic, but it’s hard not to think it might be too late, which is exactly what the people behind the AI hype are counting on.

During last night’s insomnia-fest, I stumbled upon a Bluesky thread listing some of the most egregious ways in which the GenAI fever has already screwed up education, and the most terrifying aspect is that the post is from July 2024, almost a full year ago.

I keep thinking about how the percentage of functionally illiterate adults in the U.S. has been increasing for a couple of decades (thank you, No Child Left Behind, and all the testing it engendered), and how the companies pushing GenAI (ChatGPT, Gemini ‘search’, Grammarly, fill in the blank) actually make a point of marketing to both students and teachers: “help your students develop their own writing voice by using Grammarly!” “catch your cheating students by using the latest AI tools!” “get your homework done/essays written by Grammarly, so you have time for more important things!” “save your mental energy, let GenAI write replies to your emails!” And more.

Hallucinating LLMs bouncing bullshit off one another in an endless loop, while entire generations of people grow up not knowing how to look up information in a fucking encyclopedia, or how to verify the legitimacy of a source, never mind how to think through a problem on their own.

It’s fucking *terrifying*. Doctors. Engineers. Historians. Teachers. All trained by LLMs, entirely on bullshit.

AI in Education makes AI in Publishing look like a sad joke. Blindly embracing EdTech has already ruined at least two generations’ educational experiences, and AI is threatening to have an even worse impact. Ironically, it’s the one area where there’s already plenty of data and research proving it’s a bad idea (because “AI” ain’t actually new), and yet, here we are. 🙁